As of Sunday, the European Union can ban AI systems that pose an “unacceptable risk” or cause harm. Regulators now have the authority to enforce strict compliance under the EU’s AI Act.

February 2 marks the first major deadline for the AI Act, a regulatory framework designed to control AI use across industries. The European Parliament approved the law last March after years of development. It entered into force on August 1, and companies must now meet the first compliance requirements.

AI Risk Levels and Compliance

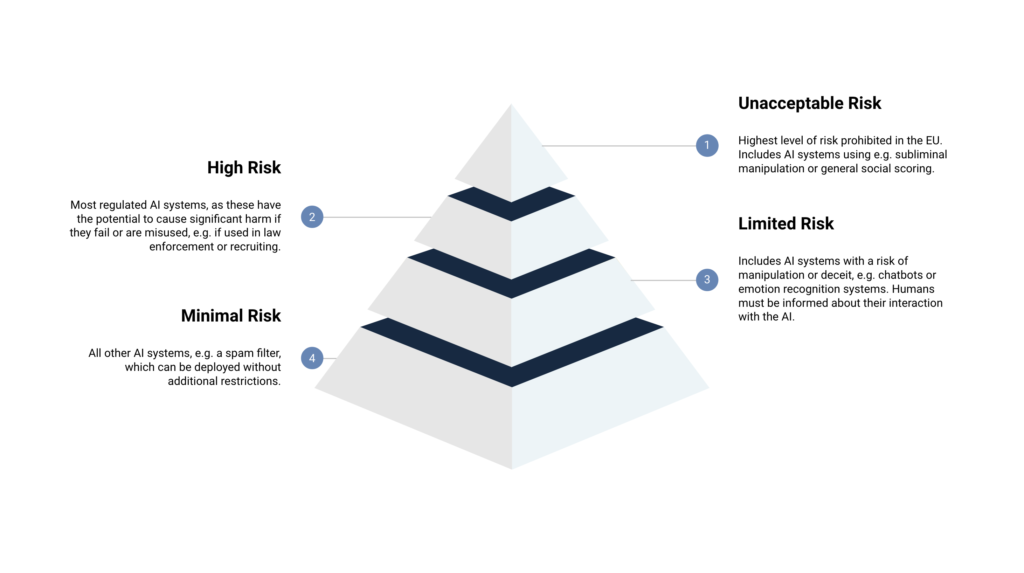

The AI Act classifies AI systems into four risk categories:

- Minimal risk (e.g., spam filters) – No regulation required.

- Limited risk (e.g., customer service chatbots) – Subject to light oversight.

- High risk (e.g., AI in healthcare) – Strict regulatory controls apply.

- Unacceptable risk – Completely banned in the EU.
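For teams keeping an inventory of their AI systems, the four tiers above could be recorded as a simple lookup. This is only an illustrative sketch: the names `RiskTier`, `OVERSIGHT`, and `is_banned` are hypothetical, and the oversight summaries are paraphrased from the list above rather than taken from the Act's legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described in the article (hypothetical names)."""
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Oversight level per tier, paraphrased from the list above.
OVERSIGHT = {
    RiskTier.MINIMAL: "no regulation required",
    RiskTier.LIMITED: "light oversight",
    RiskTier.HIGH: "strict regulatory controls",
    RiskTier.UNACCEPTABLE: "banned in the EU",
}


def is_banned(tier: RiskTier) -> bool:
    """Only the unacceptable-risk tier is prohibited outright."""
    return tier is RiskTier.UNACCEPTABLE
```

Deciding which tier a real system falls into is a legal question that this sketch does not attempt to answer.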

This month’s compliance deadline focuses on banning unacceptable risk AI applications. These include:

- AI used for social scoring (e.g., risk profiling based on behavior).

- AI that manipulates decisions through subliminal or deceptive tactics.

- AI that exploits vulnerabilities related to age, disability, or socioeconomic status.

- AI that tries to predict whether people will commit crimes based on their appearance.

- AI that uses biometrics to infer characteristics like sexual orientation.

- AI that collects real-time biometric data in public for law enforcement.

- AI that analyzes emotions at work or school.

- AI that scrapes images from the internet or security cameras to build facial recognition databases.

Harsh Penalties for Non-Compliance

Companies that use these banned AI applications in the EU face severe penalties. Regardless of where they are headquartered, they could be fined up to €35 million (~$36 million) or 7% of their annual revenue from the previous year, whichever is higher.
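The "whichever is higher" rule is easy to misread, so here is a minimal sketch of the arithmetic. The function name `max_fine_eur` and the revenue figures are hypothetical, and the calculation assumes revenue is given in whole euros; it shows only the upper bound described above, not how a regulator would set an actual fine.

```python
FIXED_CAP_EUR = 35_000_000   # the €35 million figure cited above
REVENUE_SHARE_PERCENT = 7    # the 7% of prior-year annual revenue cited above


def max_fine_eur(prior_year_revenue_eur: int) -> int:
    """Return the upper bound of the fine: the higher of the fixed cap
    and 7% of the previous year's annual revenue (whole euros)."""
    revenue_based = prior_year_revenue_eur * REVENUE_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, revenue_based)


# Hypothetical companies: one small, one large.
small = max_fine_eur(100_000_000)      # 7% is €7 million, so the fixed cap applies
large = max_fine_eur(1_000_000_000)    # 7% is €70 million, which exceeds the cap
```

Under these assumptions, the revenue-based figure overtakes the fixed amount once annual revenue passes €500 million.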

The AI Act is setting a new global standard. Businesses operating in the EU must now rethink how they develop and deploy AI.

Preliminary Pledges

Last September, more than 100 companies signed the EU AI Pact, a voluntary pledge to follow the AI Act’s principles before its official enforcement. Signatories, including Amazon, Google, and OpenAI, committed to identifying AI systems that might fall under the Act’s high-risk category.

However, some major tech firms declined to participate. Meta and Apple chose not to sign, as did French AI startup Mistral, a vocal critic of the AI Act.

Still, declining to sign the Pact does not release these companies from their obligations. They must follow the law, including the ban on unacceptably risky AI. According to Rob Sumroy, head of technology at the British law firm Slaughter and May, most companies won’t engage in these banned practices anyway.

“For organizations, a key concern around the EU AI Act is whether clear guidelines, standards, and codes of conduct will arrive in time,” Sumroy said. “Crucially, they need clarity on compliance. However, working groups are currently meeting their deadlines for the developer code of conduct.”

As regulations take shape, businesses must stay ahead to ensure compliance and avoid penalties.

Possible Exemptions

The AI Act permits law enforcement to use biometric systems in public spaces under strict conditions. These systems can assist in targeted searches, such as locating an abduction victim or preventing a specific, substantial, and imminent threat to life. However, authorities must obtain approval from the appropriate governing body. The Act also prohibits law enforcement from making decisions that adversely impact individuals based solely on AI outputs.

The law includes exceptions for emotion-detecting AI in workplaces and schools, but only when justified by medical or safety reasons, such as therapeutic applications.

Unresolved Questions Around Implementation

The European Commission plans to release additional compliance guidelines in early 2025, following a stakeholder consultation last November. However, those guidelines remain unpublished, leaving businesses uncertain about key details.

According to Sumroy, the AI Act’s interaction with other existing regulations also remains unclear. As enforcement approaches, companies must prepare for potential legal overlaps.

“AI regulation doesn’t exist in isolation,” Sumroy said. “Other frameworks, like GDPR, NIS2, and DORA, will intersect with the AI Act, creating compliance challenges—especially with overlapping incident reporting requirements. Understanding how these laws fit together is just as important as understanding the AI Act itself.”

With enforcement on the horizon, organizations must stay ahead of evolving regulations to ensure full compliance.